Teach-a-Topic: 'Learning by Teaching' with AI

Teach-a-Topic is a mobile learning concept that helps learners improve by teaching out loud and getting instant AI feedback.

Testing results: In iterative wireframe testing, 85% of participants started a teaching session without hesitation, and all of them said they’d re-teach, validating the AI listener and focused-feedback approach.

Problem & Inspiration

While searching for effective learning strategies for my Master's project, I found that teaching someone delivers the highest retention but is rarely practised.

In a survey of 48 learners, 88% said teaching improves learning, yet only 8% actually do it, mainly because of scheduling friction.

High awareness, low usage: 88% say teaching improves learning, but only 8% practise it.

Opportunity: Design a system that removes these logistical barriers so learners can focus on learning-by-teaching.

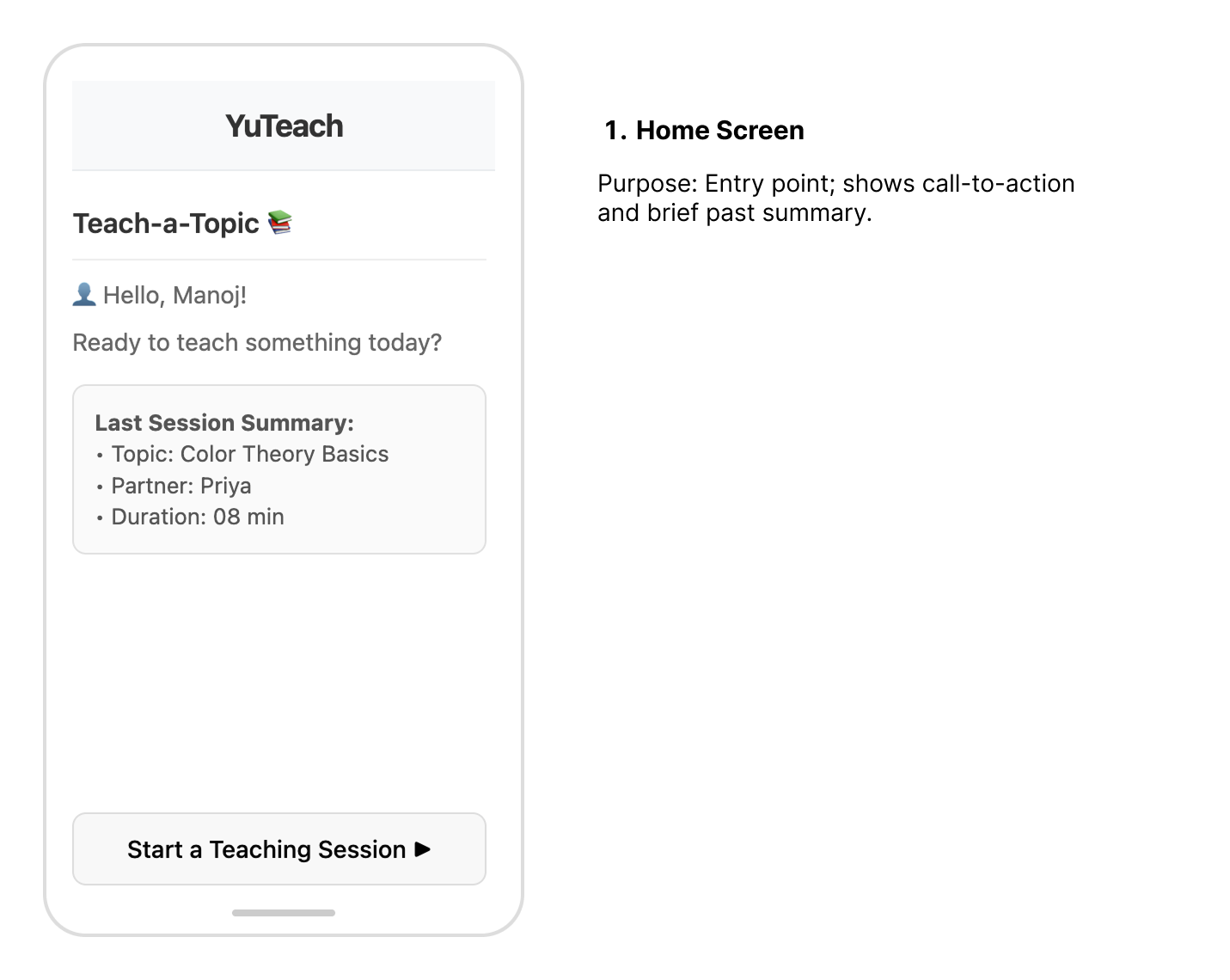

First Concept: Human Matching

My first concept matched learners with each other for short teaching sessions.

What I did

I designed low-fidelity wireframes and tested them with five students to explore the feasibility of the flow.

Outcome: While the flow was clear, overall feedback highlighted significant barriers that limited participants’ willingness to return for another session.

Issues identified

- Start friction

- Fear of judgement

- Human quality issues

- Coordination friction

Overall sentiment was negative, with the time required raised as a further concern. Only 1 of 5 participants said they might return.

Decision: The experiment showed that a human partner adds friction that cannot be easily mitigated. I decided to pivot to an AI-based learning-by-teaching approach that removes the human dependency.

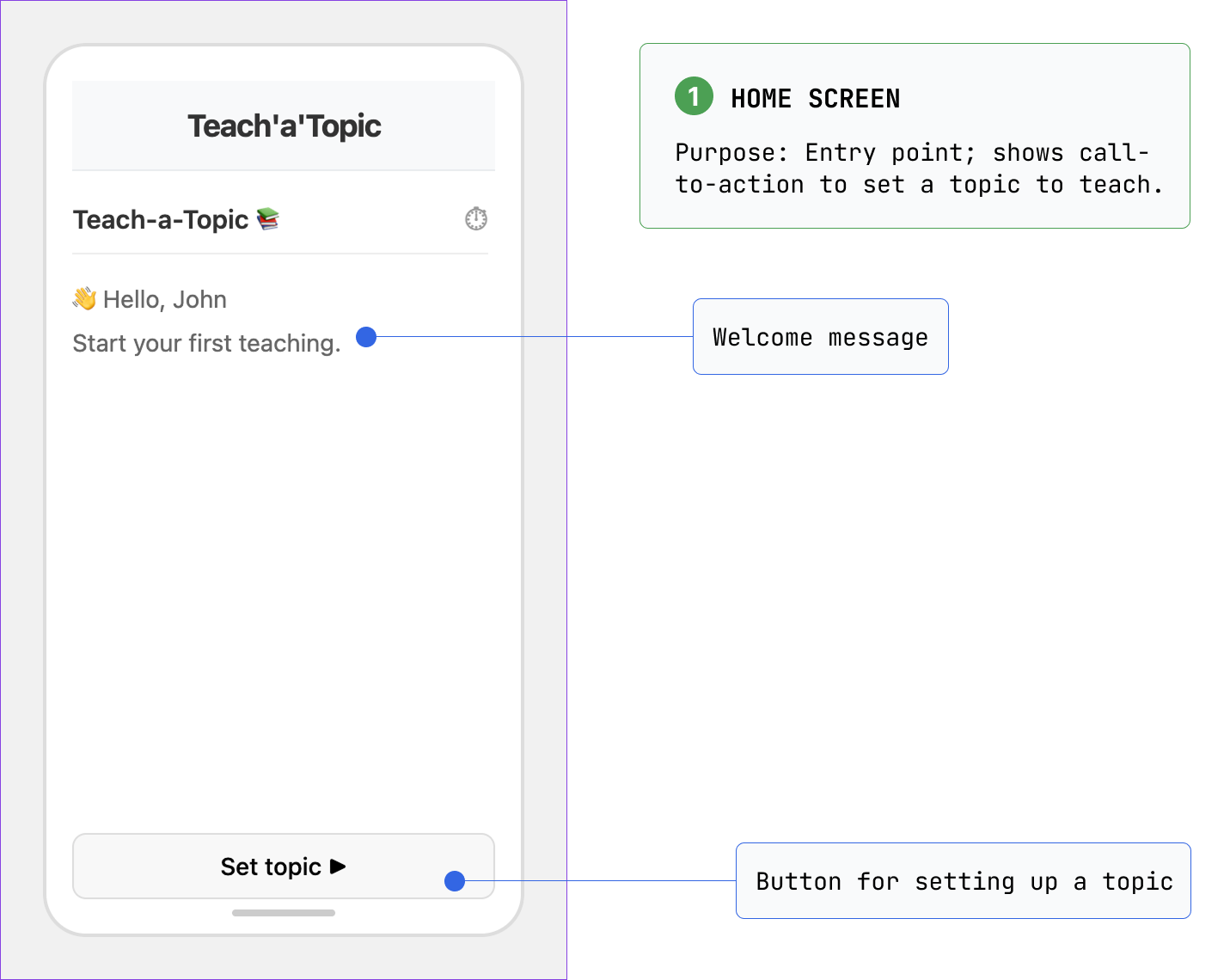

The Pivot: AI Listener

To eliminate human friction, I replaced the human with an AI listener. The AI listens to a learner’s explanation, transcribes it, and provides feedback. It’s always available, non‑judgemental, and remembers past sessions.

To make this measurable, I defined two success checks:

- Start friction: Do users begin without the hesitation that human matching caused?

- Repeat intent: Does the AI feedback feel useful enough to make them return?

This kept the work focused on behavior change, not just feature building.

Testing the AI Concept

I created low-fidelity prototypes across two rounds and iteratively refined them through user testing.

Round 1 (n=5):

Insights

- Strong start signal: 80% started without hesitation

- Weak repeat signal: only 40% said they'd return

Participants found the AI feedback "interesting" but not actionable: it did not tell them what to do next.

What I improved

Changes

- Feedback now focuses on one gap (instead of all gaps at once).

- Each piece of feedback ends with a clear next step that is easy to act on.

- A thread UI gives a feeling of continuity across sessions.

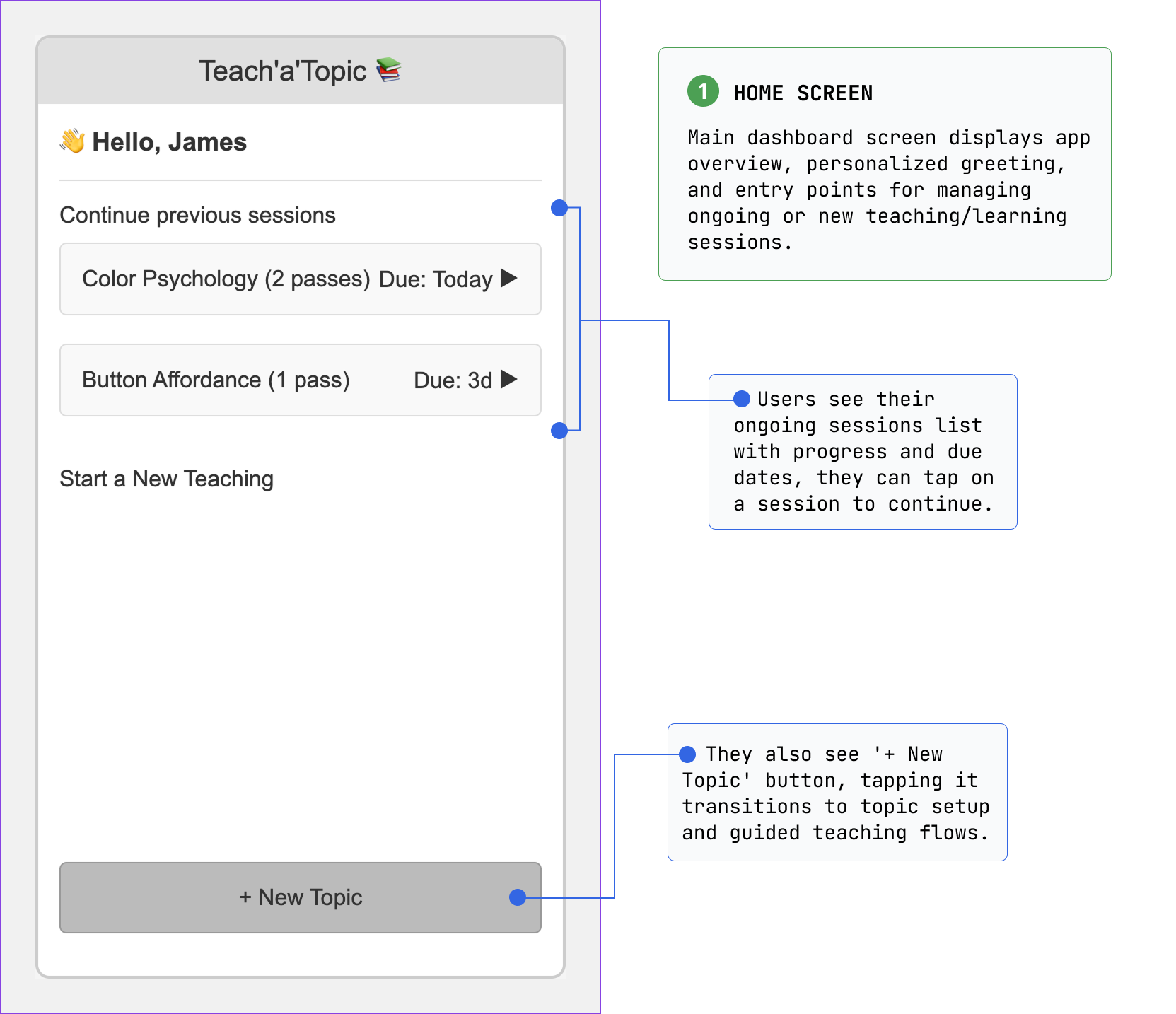

Round 2 (n=7):

Results

85% demonstrated likelihood to return.

85% started without notable hesitation.

Learning: Reducing friction gets the first use; actionable feedback drives repeat behavior.
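The percentages in both rounds come from very small samples, so it can help to see the arithmetic behind them. The following is a minimal sketch, assuming the participant counts implied by the reported figures (4 and 2 of 5 in Round 1, 6 of 7 in Round 2). These counts are inferred rather than stated in the study, and the names `TestRound` and `rate` are hypothetical. Because 6 of 7 is about 85.7%, the sketch truncates instead of rounding to match the reported 85%.

```python
from dataclasses import dataclass


@dataclass
class TestRound:
    """One round of prototype testing (counts are inferred assumptions)."""
    name: str
    participants: int
    started_without_hesitation: int  # assumed from the reported percentage
    would_return: int                # assumed from the reported percentage


def rate(count: int, total: int) -> int:
    """Return count/total as a whole-number percentage, truncated."""
    if total <= 0:
        raise ValueError("total must be positive")
    return count * 100 // total


rounds = [
    TestRound("Round 1", participants=5, started_without_hesitation=4, would_return=2),
    TestRound("Round 2", participants=7, started_without_hesitation=6, would_return=6),
]

for r in rounds:
    start = rate(r.started_without_hesitation, r.participants)
    repeat = rate(r.would_return, r.participants)
    print(f"{r.name} (n={r.participants}): start {start}%, repeat intent {repeat}%")
```

With samples this small, a single participant shifts a rate by roughly 14 to 20 percentage points, so these figures are best read as directional signals rather than precise measurements.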

Final Experience

I mapped user flows, defined key scenarios, and created mood boards to establish the app’s structure and tone, which informed the high-fidelity prototype of the core flow.

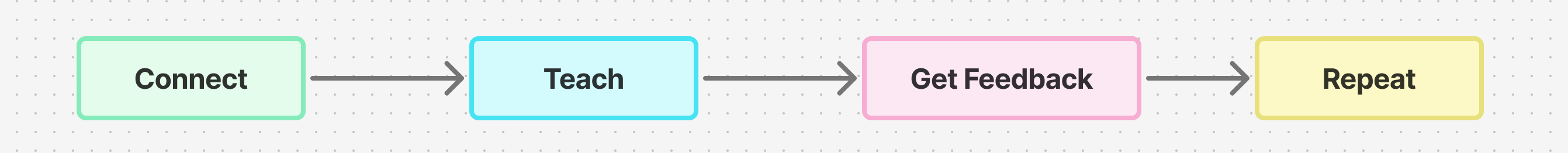

Flow chart

I tested the high-fidelity prototype with 8 participants to find usability issues:

- During teaching, other UI elements distracted users (focus issue).

- People wanted richer feedback formats, not only text.

- Repeating the loop risked feeling boring without variety.

This gave me concrete changes to prioritize before finalizing the screens.

The recording state was simplified to keep attention on explaining the topic, reducing secondary UI distractions.

Screens: Recording Focus; Setting Up

Final high-fidelity prototype:

Final Prototype

What This Demonstrates

This project shows my ability to:

- identify a behavior problem and validate it with evidence,

- test concepts quickly and pivot based on findings,

- design for repeat use (not just a one-time interaction),

- translate insights into a focused, testable product loop.

Future Work

- Building an MVP to test learning outcomes (not just usability).

- Collaborating with educators to develop credible feedback criteria.

- Exploring ethical considerations and biases in AI feedback.